With the increasing popularity of Apache Kafka as a distributed streaming platform, ensuring its high availability has become a priority for businesses. The efficient management of Kafka clusters plays a significant role in maintaining a reliable and uninterrupted streaming infrastructure.

In this article, we will explore the best practices for Kafka management that will help organizations achieve robustness and seamless performance.

Understanding Kafka topics, partitions, and replication

Apache Kafka follows a publish-subscribe model and organizes data into topics. Each topic is divided into partitions, which are the unit of parallelism: partitions can be hosted on different brokers and read by different consumers in the same group, while message order is guaranteed only within a single partition. Understanding topics, partitions, and replication is crucial for effective Kafka management.

Kafka replication provides fault tolerance by keeping copies of each partition on different brokers. One replica acts as the leader and the others follow it; if the leader’s broker fails, an in-sync follower can take over automatically. A replication factor of three is commonly recommended for production topics so that data stays durable and available when a broker fails. Additionally, spreading partitions across multiple brokers balances load and makes better use of resources.
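To make the replication trade-off concrete, here is a minimal sketch of the arithmetic behind a replication factor. It is a simplified model, not Kafka’s actual logic: it assumes every replica is in sync before the failure, and it brings in the `min.insync.replicas` topic setting, which this article does not otherwise cover. The function name is hypothetical.

```python
def replica_failure_budget(replication_factor: int, min_insync_replicas: int) -> dict:
    """Estimate how many replica failures a single partition can absorb.

    Simplified model: assumes all replicas were in sync before the failures.
    """
    if not 1 <= min_insync_replicas <= replication_factor:
        raise ValueError("min_insync_replicas must be between 1 and replication_factor")
    return {
        # The data stays readable while at least one in-sync copy survives.
        "failures_tolerated_for_reads": replication_factor - 1,
        # Producers using acks=all need min_insync_replicas live in-sync replicas.
        "failures_tolerated_for_acks_all_writes": replication_factor - min_insync_replicas,
    }


if __name__ == "__main__":
    print(replica_failure_budget(replication_factor=3, min_insync_replicas=2))
```

Under these assumptions, a replication factor of three with `min.insync.replicas=2` keeps accepting fully acknowledged writes after one broker failure and keeps the data readable after two.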

Proper management of Kafka topics, partitions, and replication is essential for high availability and fault tolerance. By distributing data across multiple brokers and ensuring replication, organizations can maintain uninterrupted access to data even in the face of failures or system outages. Implementing monitoring and alerting mechanisms can help administrators proactively identify and resolve any issues related to topics, partitions, or replication.

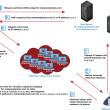

High availability and fault tolerance in Kafka clusters

High availability and fault tolerance are critical aspects of Kafka management. By implementing certain strategies and best practices, organizations can ensure that their Kafka clusters remain highly available even during unexpected failures or disruptions.

One key practice is deploying Kafka across multiple availability zones or data centers. Spreading brokers across zones reduces the risk that a single outage takes down every replica of a partition, and Kafka’s rack awareness (the broker.rack setting) can place replicas in different zones. For separate clusters in different regions or data centers, organizations can use MirrorMaker, which ships with Kafka, to replicate data between clusters and further improve fault tolerance.

Organizations should also implement proper monitoring and alerting mechanisms to achieve high availability. This includes monitoring the health and performance of Kafka brokers, producers, consumers, and overall cluster metrics. By closely monitoring key metrics such as message throughput, latency, and consumer lag, administrators can identify any potential issues early on and take appropriate actions to prevent disruptions.

Kafka management best practices for ensuring high availability

Ensuring the high availability of Kafka clusters requires adherence to certain best practices. By following these practices, organizations can minimize downtime, mitigate data loss, and ensure the continuous availability of Kafka clusters.

Firstly, organizations should regularly monitor and maintain the hardware and infrastructure on which Kafka clusters are running. This includes monitoring CPU, memory, and disk utilization, as well as network bandwidth. By proactively addressing any hardware or infrastructure bottlenecks, organizations can prevent performance degradation and maintain high availability.

Secondly, organizations should distribute the workload evenly across Kafka brokers. This means spreading partition leaders and replicas evenly across the cluster and choosing message keys that do not concentrate traffic on a few partitions. Note that in current Kafka versions, consumer group coordination and rebalancing are handled by a broker-side group coordinator rather than by Apache ZooKeeper. By ensuring that the load is distributed evenly, organizations can prevent any single broker from becoming a bottleneck and maintain high availability and performance.

Thirdly, organizations should regularly review and tune Kafka configurations for optimal performance and reliability. This includes adjusting parameters such as replication factor, batch size, and message retention policies based on the application’s specific requirements and workload. By fine-tuning these configurations, organizations can optimize resource utilization, reduce latency, and improve the overall performance of Kafka clusters.

Monitoring Kafka clusters for performance and availability

Monitoring Kafka clusters is essential to ensure their optimal performance and availability. By closely monitoring key metrics and setting up alerts, administrators can proactively identify and resolve any issues that may impact the performance or availability of Kafka clusters.

Monitoring tools such as CMAK (formerly Kafka Manager), Confluent Control Center, or a combination of Prometheus and Grafana can provide valuable insights into the health and performance of Kafka clusters. These tools enable administrators to monitor key metrics such as message throughput, latency, disk utilization, and broker availability.

In addition to monitoring cluster metrics, organizations should also monitor individual producers’ and consumers’ health and performance. This includes monitoring the rate of produced and consumed messages, consumer lag, and any potential bottlenecks or issues with specific producers or consumers.
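Consumer lag is simple to compute once the offsets are available: for each partition, it is the gap between the latest offset in the log and the offset the consumer group has committed. The sketch below assumes those two sets of offsets have already been collected (for example, by a monitoring agent) and are passed in as plain dictionaries. The function name and input shape are hypothetical, and a partition with no committed offset is treated as if the group had committed offset 0.

```python
from typing import Dict, Tuple

TopicPartition = Tuple[str, int]  # (topic name, partition number)


def consumer_lag(
    end_offsets: Dict[TopicPartition, int],
    committed_offsets: Dict[TopicPartition, int],
) -> Dict[TopicPartition, int]:
    """Return per-partition lag: log end offset minus committed offset."""
    lag = {}
    for tp, end_offset in end_offsets.items():
        # Assumption: a missing commit is treated as offset 0.
        committed = committed_offsets.get(tp, 0)
        # Clamp at zero in case the two readings were taken at different times.
        lag[tp] = max(end_offset - committed, 0)
    return lag


if __name__ == "__main__":
    ends = {("orders", 0): 120, ("orders", 1): 80}
    commits = {("orders", 0): 100, ("orders", 1): 80}
    print(consumer_lag(ends, commits))
```

A lag that keeps growing over time usually matters more than any single reading, so it is worth charting the total per consumer group.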

By setting up alerts based on predefined thresholds, administrators can be notified of abnormal behaviour or performance degradation as soon as it occurs. This allows them to take immediate action, such as scaling up resources, reassigning partitions, or investigating the affected producers and consumers, ensuring the continuous availability and optimal performance of Kafka clusters.
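Threshold-based alerting can be sketched in a few lines. The example below is illustrative only: the metric names (`consumer_lag`, `disk_used_pct`, `online_brokers`) and the limits are hypothetical, and in practice this logic usually lives in the alerting rules of the monitoring system rather than in application code.

```python
from typing import Dict, List, NamedTuple


class Threshold(NamedTuple):
    metric: str      # hypothetical metric name
    limit: float     # value that triggers the alert when crossed
    direction: str   # "above" or "below"


def evaluate_alerts(metrics: Dict[str, float], thresholds: List[Threshold]) -> List[str]:
    """Return one message per breached threshold or missing metric."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            # A metric that stopped reporting is itself worth an alert.
            alerts.append(f"{t.metric}: no data")
            continue
        breached = value > t.limit if t.direction == "above" else value < t.limit
        if breached:
            alerts.append(f"{t.metric}={value} is {t.direction} {t.limit}")
    return alerts


if __name__ == "__main__":
    current = {"consumer_lag": 15000, "disk_used_pct": 60, "online_brokers": 3}
    rules = [
        Threshold("consumer_lag", 10000, "above"),
        Threshold("disk_used_pct", 80, "above"),
        Threshold("online_brokers", 3, "below"),
    ]
    for message in evaluate_alerts(current, rules):
        print(message)
```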

Kafka cluster capacity planning and scaling

Proper capacity planning and scaling are essential for maintaining high availability and performance in Kafka clusters. By accurately estimating the required resources and scaling the clusters accordingly, organizations can ensure that Kafka can handle the expected workload without any disruptions.

Capacity planning involves analyzing historical data and predicting future growth to determine the required resources, such as CPU, memory, and disk space. When planning the capacity of Kafka clusters, it is important to consider factors such as message throughput, retention policies, and expected data growth.
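As a rough starting point, disk capacity can be estimated from write throughput, retention period, and replication factor. The sketch below is a back-of-the-envelope calculation, not a sizing tool: the function name and the headroom parameter are assumptions, and it ignores compression, index files, and uneven partition distribution.

```python
def required_disk_gib(
    write_mib_per_sec: float,
    retention_hours: float,
    replication_factor: int,
    headroom: float = 0.3,
) -> float:
    """Estimate total cluster disk (GiB) needed to hold the retained data."""
    # Data written during one retention window, before replication.
    raw_mib = write_mib_per_sec * retention_hours * 3600
    # Every replica stores a full copy of the data.
    replicated_mib = raw_mib * replication_factor
    # Keep spare capacity for growth, rebalancing, and traffic spikes.
    return replicated_mib * (1 + headroom) / 1024


if __name__ == "__main__":
    total = required_disk_gib(write_mib_per_sec=10, retention_hours=24,
                              replication_factor=3, headroom=0.25)
    brokers = 6  # hypothetical cluster size
    print(f"total: {total:.1f} GiB, per broker: {total / brokers:.1f} GiB")
```

With these example inputs (10 MiB/s, 24 hours of retention, replication factor 3, 25% headroom) the estimate comes to roughly 3.1 TiB for the whole cluster.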

Scaling Kafka clusters can be done horizontally by adding more brokers or vertically by increasing the resources allocated to each broker. When scaling horizontally, it is important to ensure proper load balancing and data distribution across the new and existing brokers. New brokers do not take over existing partitions automatically; partitions must be reassigned to them, for example with the kafka-reassign-partitions tool that ships with Kafka or with a tool such as Cruise Control.
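To illustrate what redistributing partitions after adding brokers involves, the sketch below builds a simple round-robin replica assignment and prints it as JSON. The output follows the shape accepted by Kafka’s partition reassignment tool (a `version` field plus a list of topic, partition, and replica entries), but the function itself is hypothetical. It ignores where replicas currently live, so it may move more data than necessary; dedicated tools try to minimize that movement.

```python
import json
from typing import Dict, List


def build_reassignment(
    topic: str,
    partition_count: int,
    broker_ids: List[int],
    replication_factor: int,
) -> Dict:
    """Spread the replicas of each partition round-robin across brokers."""
    if replication_factor > len(broker_ids):
        raise ValueError("replication factor cannot exceed the number of brokers")
    partitions = []
    for partition in range(partition_count):
        # Shift the starting broker per partition so that leaders
        # (the first replica in each list) are spread evenly too.
        replicas = [
            broker_ids[(partition + offset) % len(broker_ids)]
            for offset in range(replication_factor)
        ]
        partitions.append({"topic": topic, "partition": partition, "replicas": replicas})
    return {"version": 1, "partitions": partitions}


if __name__ == "__main__":
    # Hypothetical topic, expanded from three brokers to four.
    plan = build_reassignment("orders", partition_count=4,
                              broker_ids=[1, 2, 3, 4], replication_factor=3)
    print(json.dumps(plan, indent=2))
```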

Organizations should also regularly review and adjust the capacity of Kafka clusters based on changing workloads and requirements. This includes monitoring resource utilization and performance metrics and scaling up or down as needed to maintain high availability and optimal performance.

Configuring Kafka for optimal performance and reliability

Configuring Kafka properly is crucial for achieving optimal performance and reliability. By fine-tuning various parameters and configurations, organizations can ensure that Kafka clusters operate efficiently and reliably under high-load scenarios.

One key configuration to consider is the replication factor. By setting a higher replication factor, organizations can ensure data durability and fault tolerance. However, a higher replication factor also increases the storage and network overhead. Therefore, it is important to strike a balance between durability and resource utilization based on the application’s specific requirements.

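Another important configuration is batching. On the producer, batch.size sets the maximum size in bytes (not the number of messages) of a batch of records destined for a single partition, and linger.ms sets how long the producer waits for a batch to fill before sending it. On the consumer, settings such as fetch.min.bytes and max.poll.records control how much data is fetched and processed at a time. Larger batches can improve throughput but may increase latency. It is important to find the settings that balance throughput and latency based on the workload and application requirements.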
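The throughput and latency trade-off can be approximated with simple arithmetic. The sketch below models a producer that sends a batch either when it is full or when a linger timeout expires, whichever comes first, which is how the producer’s `batch.size` and `linger.ms` settings interact in broad terms. It is a simplified model with a hypothetical function name: it ignores compression, network time, and the fact that an idle producer may send partially filled batches sooner.

```python
def batch_wait_ms(
    batch_size_bytes: int,
    linger_ms: float,
    msgs_per_sec: float,
    avg_msg_bytes: float,
) -> float:
    """Estimate how long a record waits before its batch is sent.

    msgs_per_sec is the rate for ONE partition, since batches are per partition.
    """
    bytes_per_ms = msgs_per_sec * avg_msg_bytes / 1000
    if bytes_per_ms <= 0:
        # No traffic: only the linger timeout can trigger a send.
        return linger_ms
    fill_ms = batch_size_bytes / bytes_per_ms
    # The batch goes out when it is full or when linger expires.
    return min(fill_ms, linger_ms)


if __name__ == "__main__":
    for linger in (5, 100):
        wait = batch_wait_ms(16384, linger, msgs_per_sec=1000, avg_msg_bytes=200)
        print(f"linger={linger} ms -> waits up to {wait:.2f} ms")
```

In this example the traffic is too light to fill a 16 KiB batch within 5 ms, so the linger timeout sets the added latency; with a 100 ms linger, the batch fills first.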

Organizations should also configure message retention policies so that data is kept for the required duration and no longer. Retention can be limited by time (retention.ms) or by size (retention.bytes), and the cleanup policy (cleanup.policy) determines whether expired data is deleted or the log is compacted to keep only the latest value for each key. Properly configured retention optimizes storage utilization while keeping data available for as long as it is needed.
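Time-based retention works on whole log segments rather than individual messages, which is why data can remain on disk somewhat longer than the configured period. The sketch below is a simplified model of that behaviour, not Kafka’s implementation: the `Segment` type and function name are hypothetical, and it assumes the newest (active) segment is never deleted.

```python
from typing import List, NamedTuple


class Segment(NamedTuple):
    base_offset: int          # first offset stored in the segment
    newest_timestamp_ms: int  # timestamp of the newest record in the segment
    size_bytes: int


def expired_segments(segments: List[Segment], now_ms: int, retention_ms: int) -> List[Segment]:
    """Return closed segments whose newest record is older than the retention period."""
    # Assumption: segments are ordered oldest first and the last one is still
    # being written to, so it is excluded from deletion.
    closed = segments[:-1]
    return [s for s in closed if now_ms - s.newest_timestamp_ms > retention_ms]


if __name__ == "__main__":
    log = [
        Segment(base_offset=0, newest_timestamp_ms=1_000, size_bytes=100),
        Segment(base_offset=100, newest_timestamp_ms=5_000, size_bytes=100),
        Segment(base_offset=200, newest_timestamp_ms=9_000, size_bytes=50),
    ]
    to_delete = expired_segments(log, now_ms=10_000, retention_ms=6_000)
    print([s.base_offset for s in to_delete])
    print(sum(s.size_bytes for s in to_delete), "bytes reclaimable")
```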

Conclusion and key takeaways

In conclusion, efficient management of Kafka clusters ensures high availability and reliable performance. By following the best practices discussed in this article, organizations can optimize their Kafka management techniques and enhance the reliability of their streaming architecture.