Many years ago, one of the organizations that I worked with dealt with shipments and warehouse-related processing logic, and primarily relied on a central SQL database to power more than a dozen teams in our organization.

Instead of trying to understand the intricacies of that business which many folks won’t associate with, we will use a simplified payment processing system that processes travel bookings (e.g. air travel, museum tickets, hotel booking etc) to understand the problem that we dealt with; and how we resolved the scaling bottleneck before it completely broke down due to overload. We will look at some important learnings that should be broadly applicable to similar systems relying on SQL databases.

Background:

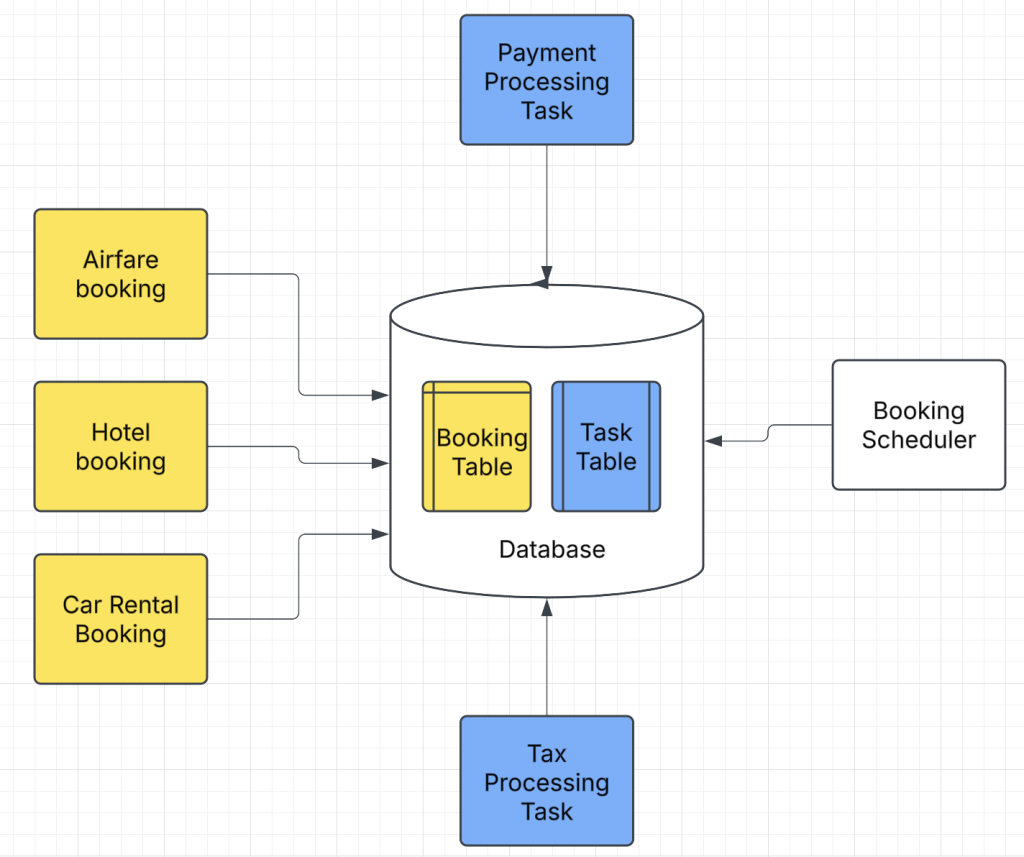

Before we begin, I want to point out that there are specific systems where SQL databases are necessary and can’t be replaced. However, for many use cases, the advanced capability of Atomicity, Consistency, Isolation and Durability (ACID property) provided by SQL can be relaxed which improves the performance and scaling profile of the overall application. Let us begin by looking at the below architecture for payment processing:

- There are different systems that handle different types of bookings e.g. Airfare booking, hotel booking, car rental booking. The booking data was written to the Booking table.

- The Booking Scheduler service ran every few seconds checking for new bookings, and created new tasks required for every booking e.g. Payment Processing Task, Tax Processing Task etc. We have shown just 2 tasks above but in our system, dozens of tasks were created for each new booking or if a booking reservation was updated.

- Payment Processing Task and Tax Processing tasks executed their respective business logic for a specific booking and went through phases of CREATED, INPROGRESS, DONE, ERRORED, and WAITING.

Problem:

This system ran quite well for 10+ years but we were quickly reaching breaking point as our business grew and traffic grew with it.

Issues of this architecture system:

- Maintenance: Regular DB maintenance by Database Administrators (DBA) created an outage of several minutes for all teams. In the past, we also encountered a situation where manual mistakes by the DBAs led to an outage for several hours. We patched this by implementing a new process on how manual actions were performed. Nevertheless, there was still a large impact radius due to human errors if a specific process was not followed by someone new on the DBA team.

- Security risk: Shared DB tables were a security risk as different teams had access to entire data when only specific items were of interest to them.

- Agility issues: Updating table schema required approvals from all teams which slowed down the development process.

- Performance issues: The table had locking contention since multiple teams handled transactions on table entries. The database CPU could spike if one of the teams wrote a complicated query on the table and this problem became more complex with time.

Some astute readers might have already noticed that the database was serving as a scheduler based on new bookings or updated bookings. It would also track the progress of follow-up tasks that were created for each booking.

As a first step, we went through the discussion of having an orchestrator (e.g. a workflow engine), that coordinates the sequence of tasks for every booking. However, one downside of this architecture was that there was still a need for a central coordinating team and the owner of the workflow had to ensure new tasks for failures in specific steps needed coordination between the teams. We wanted to adopt a more agile architecture that could allow us to add more tasks for every booking (e.g. publish to data warehouse etc.) in future without the need of coordinating with a central team.

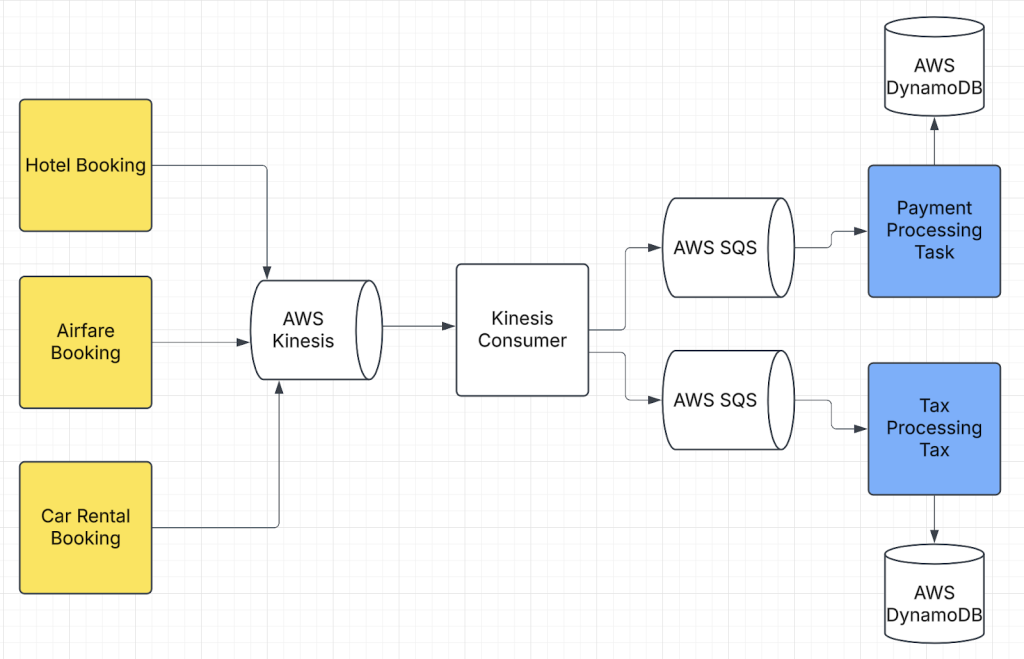

After a few iterations, we adopted an event-based architecture using fully managed solutions from AWS like Kinesis, SQS and DDB. The new architecture looked as follows.

- The sources of booking push the booking details to the AWS Kinesis queue. Kinesis is similar to Kafka but is a fully managed solution from AWS.

- A Kinesis consumer reads the data from Kinesis shards (shards are similar to partitions in Kafka world) and creates events for respective tasks to individual SQS queues.

- The SQS consumers (i.e. Payment Processing Task etc.) consume events for every booking and execute specific logic required for every booking.

If you note, we ended up using a DynamoDB with SQS consumers. The reason for this was that in rare scenarios, SQS can deliver the same message twice. We didn’t want to execute the payment processing task twice. To get around the situation, we ended up using locking with DDB for a given bookingID.

Not an ideal solution but it worked really well and did not interfere with the scaling characteristics that we were looking for. We could have used Kinesis instead of SQS here, but the problem with Kinesis was that it only processes records for a given shard and we needed high throughput from these task queues since these tasks from different bookingIDs don’t need to be processed serially and can be processed in parallel.

We could have achieved more parallelism with Kinesis by using more shards, but Kinesis charges at a shard level. When we compared the cost of using Kinesis vs SQS + DDB, the latter proved to be a better solution from a throughput and cost perspective.

This architecture also provided a better boundary of ownership between teams compared to before where ownership was split on common code bases. We could have further simplified the Kinesis consumer-related maintenance by using AWS Lambda with Kinesis instead of a Kinesis consumer that ran on our EC2 instances.

Conclusion:

While SQL databases are extremely powerful, we unintentionally ended up incorrectly using some of SQL databases’ capabilities which led to a situation where we started seeing performance, agility and security risks. This is what happens when business is growing too fast, available resources are falling behind and not enough time is spent analyzing overall architecture.

By shifting our approach to an event-based architecture, we were not only able to get around the scaling issue but also ended up separating ownership boundaries that allowed for faster iteration of products in future. The last time I checked, the system added another dozen tasks without any scaling issues which to me was a success story in itself.

Top/Featured Image via Pixabay/Tarry_not