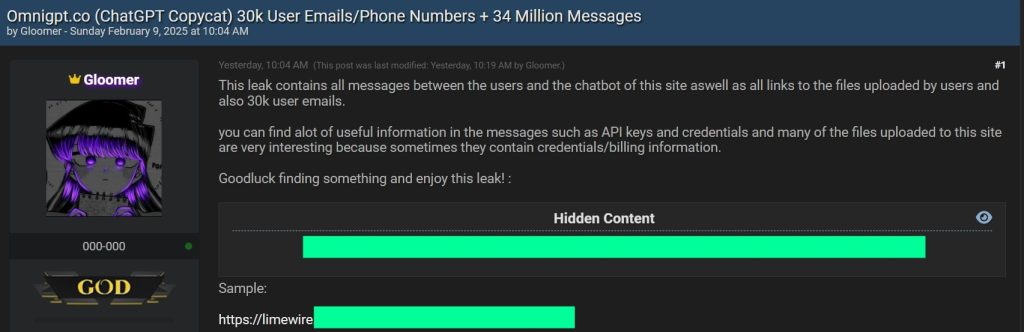

A hacker claims to have breached OmniGPT, a popular AI-powered chatbot and productivity platform, exposing 30,000 user emails, phone numbers, and more than 34 million (34,270,455) lines of user conversations. The data was published on Breach Forums on Sunday at 10:04 AM by a hacker using the alias “Gloomer.”

How Does OmniGPT Work?

OmniGPT integrates several AI models into a single interface, including ChatGPT-4, Claude 3.5, Perplexity, Google Gemini, and Midjourney. Designed to enhance efficiency, it offers features such as data encryption, team collaboration tools, document management, image analysis, and WhatsApp integration. Pricing ranges from a free tier with basic features to a Plus plan at $16 per month, which provides access to more advanced models and functionality.

The Leaked Data

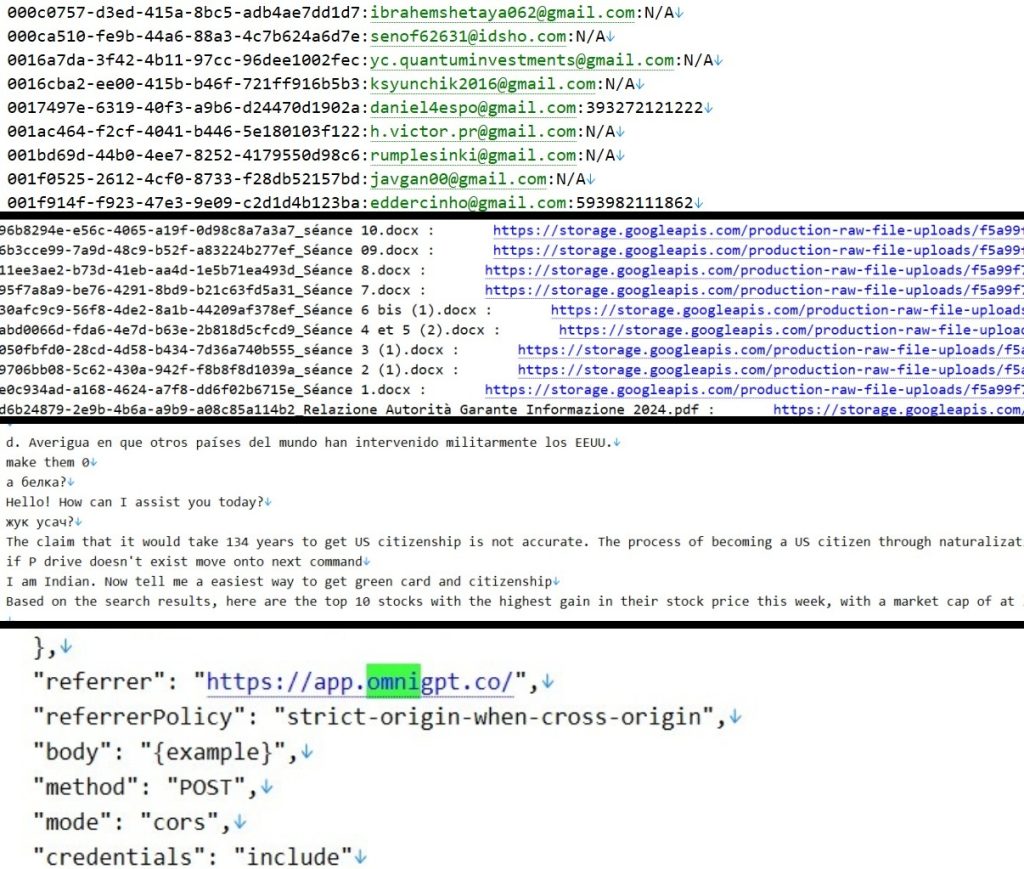

The leak, as analysed by the Hackread.com research team, includes messages exchanged between users and the chatbots and links to uploaded files, some of which contain credentials, billing information, and API keys. We also discovered over 8,000 email addresses that users shared with chatbots during conversations.

If confirmed, this breach would be one of the largest leaks of AI-generated conversation data, exposing users to identity theft, phishing scams, financial fraud and more. In a post accompanying the download link, the hacker stated:

“This leak contains all messages between the users and the chatbot of this site, as well as all links to the files uploaded by users and also 30k user emails. You can find a lot of useful information in the messages, such as API keys and credentials. Many of the files uploaded to this site are very interesting because sometimes they contain credentials/billing information.”

Sample Data: Messages, Files, and User Information

A sample from the leaked dataset includes chat messages from users discussing technical and development topics, such as:

User: Kamil

“The cut duration is not displayed correctly.”

User: Marcus

“Right now, I’m seeing it be accurate for a cut. How is it displaying incorrectly?”

User: Javier

“This comes from review.display_data (edited).”

Along with message logs, the leak includes links to files uploaded to OmniGPT’s servers, which may contain sensitive information in PDF and other document formats. Some of the leaked files include:

- Office projects

- University assignments

- Market analysis reports

- WhatsApp chat screenshots

- Police verification certificates

- Many other personal and business-related documents

Data Breach and GDPR

While the hacker’s modus operandi behind the alleged breach remains unclear, the leaked data suggests that OmniGPT has a global user base, with a major portion of the exposed information belonging to users from South America, particularly Brazil; Europe, especially Italy; and Asia, including India, Pakistan, China, and Saudi Arabia.

If confirmed, this breach could present serious legal and regulatory challenges, particularly concerning GDPR compliance in Europe, where strict data protection laws require companies to safeguard user information and report breaches promptly. Failure to address these issues could lead to heavy fines and legal repercussions, further complicating OmniGPT’s position in the global market.

Consequences: Identity Theft, Phishing, and API Key Exploitation

If the hacker’s claims are accurate, this leak could pose significant cybersecurity and privacy risks for OmniGPT users. Exposed email addresses and phone numbers may lead to targeted phishing attacks or increase the risk of identity theft.

Additionally, if users shared sensitive API keys or credentials in chatbot conversations, attackers could exploit this information to gain unauthorized access to third-party services or personal accounts. Furthermore, some of the leaked files may contain billing information or confidential business data, exposing companies to financial fraud, data theft, or corporate espionage.

In a comment to Hackread.com, Jason Soroko, Senior Fellow at Sectigo, a Scottsdale, Arizona-based certificate lifecycle management (CLM) provider, warned of unchecked advancements in AI, stating:

“OmniGPT’s breach highlights the risk that rapid AI innovation is outpacing basic security, neglecting privacy measures in favour of convenience. Unchecked progress in AI inevitably invites vulnerabilities that undermine user confidence and technological promise.”

Andrew Bolster, Senior R&D Manager at Black Duck, a Burlington, Massachusetts-based application security provider, also commented on the incident, warning that even AI experts working with cutting-edge Generative AI remain vulnerable to security breaches and must adhere to strict security practices.

“If confirmed, this OmniGPT hack demonstrates that even practitioners experimenting with bleeding-edge technology like Generative AI can still get penetrated, and that industry best practices around application security assessment, attestation and verification should be followed,” Andrew said. “But what’s potentially most harrowing to these users is the nature of the deeply private and personal ‘conversations’ they have with these chatbots; chatbots are regularly being used as ‘artificial-agony-aunts’ for intimate personal, psychological, or financial questions that people are working through.”

Andrew also highlighted the critical risk to user privacy and psychological safety, emphasizing the need for ethical AI standards like IEEE 7014. “In that context, the complete compromise of these underlying conversations and file uploads represents a complete breach of OmniGPT’s customers’ privacy and their trust.”

“Anyone building Generative AI systems must ensure that they’re following secure software development policies, that is now an industry given; but they also have a responsibility to protecting their customers’ psychological safety as well, and should be making best efforts to follow the principles of guidance such as IEEE’s recently published, 7014 ‘Standard for Ethical Considerations in Emulated Empathy in Autonomous and Intelligent Systems,’” he added.

OmniGPT’s Response: Silence So Far

As of now, OmniGPT has not issued an official response regarding the alleged breach. Hackread.com has contacted OmniGPT for a response, and this article will be updated as new information becomes available.

If you have an OmniGPT account, it is important to take immediate precautions to protect your data. Start by changing your passwords, especially if you have shared any credentials or sensitive information on the platform. Enabling two-factor authentication (2FA) adds an extra layer of security against unauthorized access.

Stay alert by monitoring your emails and financial accounts for any unusual activity, such as unauthorized logins or fraudulent transactions. Be cautious of phishing attempts, as cybercriminals may use leaked email addresses to send fake messages pretending to be from OmniGPT or other trusted sources. Also, if you have used OmniGPT to process API keys, consider revoking old tokens and generating new ones to prevent unauthorized access.
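For readers who keep their own copies of chatbot conversations, a quick scan can help decide which keys to rotate first. The following is a minimal Python sketch, not a complete secret scanner: the function names `find_sensitive` and `redact` are hypothetical, and the three patterns (email addresses, AWS-style access key IDs beginning with `AKIA`, and keys beginning with `sk-`) are illustrative assumptions that will miss many credential formats and may flag harmless strings.

```python
import re

# Illustrative patterns only (assumptions for this sketch); dedicated
# secret scanners ship far larger and more precise rule sets.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "sk_style_key": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}\b"),
}


def find_sensitive(text):
    """Return a sorted list of unique (label, match) pairs found in text."""
    hits = set()
    for label, pattern in PATTERNS.items():
        for match in pattern.findall(text):
            hits.add((label, match))
    return sorted(hits)


def redact(text):
    """Replace every pattern match with a labelled placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label.upper()} REDACTED]", text)
    return text


if __name__ == "__main__":
    sample = "Use AKIAIOSFODNN7EXAMPLE and mail results to dev@example.com"
    for label, value in find_sensitive(sample):
        print(label, value)
    print(redact(sample))
```

Any key the scan turns up should be treated as compromised and revoked at the issuing service; redacting a local copy does nothing about data that has already leaked.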

This is a developing story, and we will update it as more information becomes available.