Distributed systems (1) are critical to modern technology, but if they’re not properly optimized, they can sink your business. In more than 10 years of optimizing large-scale systems, I’ve found that whether it’s an e-commerce platform or a global data network, the right strategies make all the difference.

Ready to take your distributed systems to the next level? In this guide, I’ll show you how to implement proven best practices that eliminate bottlenecks, slash latency, and drive scalability. You’ll learn the simple steps that top tech companies use to stay ahead in a competitive market.

Let’s get started.

The Core Principles of Distributed Systems

A distributed system is a network of independent computers that appears to end users as a single, unified system. Its nodes can be spread across various locations but are designed to coordinate and work together toward common goals. At the heart of these systems are the principles of scalability, fault tolerance, and high availability.

| Concept | Definition | Key Design Principles | Strategies for Implementation | Best Practices |
|---|---|---|---|---|
| Scalability | The ability of a system to handle increased load or demand and expand to accommodate growth. | Horizontal scaling (adding more machines); vertical scaling (upgrading existing resources) | Load balancing; sharding of databases; distributed systems | Use cloud services for dynamic scaling; implement resource optimization techniques (e.g., elastic compute) |
| Fault Tolerance | A system’s capacity to continue operating properly despite the failure of some of its components. | Redundancy; self-healing mechanisms; diversification of components | Automated failover mechanisms; clustering (active-active, active-passive); database replication | Implement failover testing; monitor system health and automate recovery processes |
| High Availability | Ensures continuous system operation, even in the face of disruptions, often achieved through redundancy and failover mechanisms. | Redundancy; diversity in components; fail-safe defaults; self-healing systems; continuous monitoring | Cloud-based services; database replication; load balancing; redundant network and power configurations | Implement regular backups; use automated alerts to ensure uptime; design for 99.999% availability |
| Redundancy | Duplicating critical components or systems to avoid single points of failure. | Multiple servers; replicated databases; redundant power and networking setups | Use of redundant power supplies; implement geographic failover clusters | Regularly test redundancy systems; monitor redundancies to ensure they are working as expected |
| Self-Healing | The system’s ability to automatically detect failures and restore normal operations without human intervention. | Automatic recovery from failures; automated restarts; health checks and status monitoring | Continuous automated system monitoring; use of orchestration tools (e.g., Kubernetes) for recovery | Ensure automatic restoration is fast and efficient; conduct failure simulations regularly |
| Monitoring & Feedback | Continuous tracking of system performance, which enables quick identification of failures and system issues. | Real-time monitoring of system metrics (CPU, memory, etc.); logging and alerting mechanisms | Implement observability tools (e.g., Prometheus, Grafana); continuous integration and automated testing tools | Implement alerting thresholds; use machine learning to predict failures before they happen |
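
To make the "99.999% availability" target in the table concrete, here is a minimal sketch of the arithmetic behind an availability budget. The function names are my own, and the redundancy formula assumes component failures are fully independent, which real systems rarely achieve.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # average year, including leap days


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Yearly downtime budget, in minutes, for an availability target."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be a percentage between 0 and 100")
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


def serial_availability(*components: float) -> float:
    """Availability (as a fraction) when every component must be up."""
    result = 1.0
    for availability in components:
        result *= availability
    return result


def redundant_availability(component: float, copies: int) -> float:
    """Availability when any one of N redundant copies is enough.

    Assumption: copies fail independently of each other.
    """
    return 1 - (1 - component) ** copies


if __name__ == "__main__":
    for target in (99.9, 99.99, 99.999):
        print(f"{target}% -> {allowed_downtime_minutes(target):.1f} min/year")
```

Five nines leaves a budget of roughly five minutes of downtime per year, which is why the table pairs that target with redundancy and automated failover rather than manual recovery.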

Scalability Challenges in Distributed Systems

Scalability is a critical challenge when designing and deploying distributed systems, particularly as data volumes increase and real-time processing becomes more essential. Scaling a system means expanding its capacity to handle increasing load, whether that load comes from users, transactions, or data.

Elasticity in the Cloud

One of the primary ways organizations scale distributed systems is by utilizing cloud infrastructure, which provides the necessary resources to scale up or down in real-time. Public cloud platforms like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure offer services such as auto-scaling, load balancing, and elastic computing, which dynamically adjust resources based on demand.

For example, AWS Lambda, a serverless computing service, allows developers to run code in response to events and automatically manage the scaling of resources. This means that as user demand increases, additional instances of a function can be automatically deployed without manual intervention.

Moreover, Google Cloud offers managed Kubernetes through Google Kubernetes Engine (GKE). Kubernetes, a container orchestration platform originally developed at Google, automates the deployment, scaling, and operation of application containers so that they can be managed efficiently across a range of environments.
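
The scaling decision behind this kind of auto-scaling can be sketched in a few lines. The core formula below follows the one documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)); the function name, the default bounds, and the omission of tolerance and stabilization windows are simplifications of my own.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Replica count that would bring the per-replica metric back to target.

    Simplified: a real autoscaler also applies a tolerance band and
    stabilization windows to avoid flapping.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target
    print(desired_replicas(4, current_metric=90.0, target_metric=60.0))
```

The same proportional rule scales down when the metric falls below target, and the min/max bounds keep a noisy metric from scaling the service to zero or to an unbounded size.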

The Power of Edge Computing: Fog Architecture

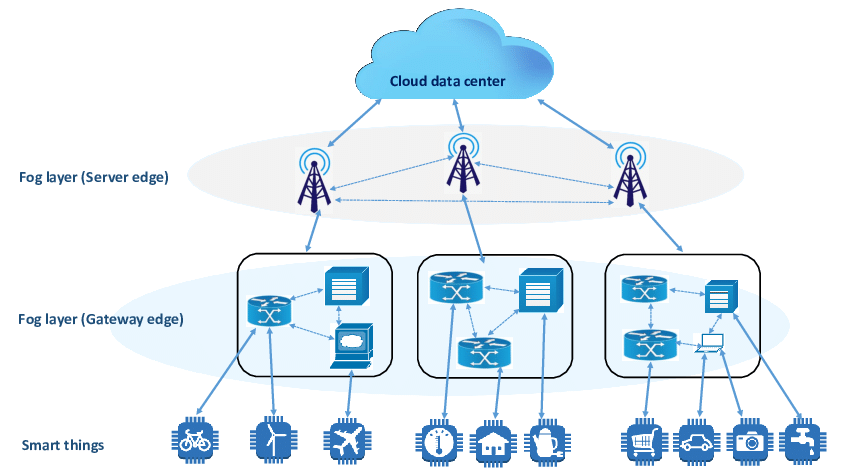

While the cloud provides incredible scalability, it also introduces challenges related to latency, bandwidth, and network reliability. As data processing moves from the cloud to the edge of the network, a new architecture called Fog Computing is gaining traction. Fog computing, an extension of cloud computing, brings computation, storage, and networking closer to data sources, such as IoT devices, sensors, and autonomous systems.

For instance, in industrial automation, fog nodes (2) can process data at the edge of the network, reducing the need for data to be sent to the cloud for analysis. This is particularly valuable when real-time decisions are required, such as in medical monitoring or autonomous vehicle systems. As Cisco points out, this local processing can significantly reduce network congestion, lower latency, and enhance resilience in mission-critical applications.

Fog computing is already being used in IoT applications, where real-time data analytics are required. In these cases, it makes more sense to preprocess the data locally at the edge and send only the most relevant information to the cloud. For example, connected vehicles use fog computing to process sensor data locally, ensuring that responses to critical events are almost instantaneous.
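
As a rough illustration of "preprocess locally, send only what matters," the sketch below reduces a window of raw sensor readings to a small summary plus any readings that cross an alert threshold. The `Summary` type, the field names, and the threshold are hypothetical; a real fog node would choose them per sensor type.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass
class Summary:
    count: int
    average: float
    maximum: float
    alerts: list[float]  # raw readings above the alert threshold


def summarize_window(readings: list[float], alert_threshold: float) -> Summary:
    """Reduce one window of raw readings to what the cloud needs to see."""
    if not readings:
        raise ValueError("readings must not be empty")
    return Summary(
        count=len(readings),
        average=fmean(readings),
        maximum=max(readings),
        alerts=[r for r in readings if r > alert_threshold],
    )


if __name__ == "__main__":
    window = [20.0, 21.0, 95.0, 22.0]
    # Only this summary, not the raw window, would be sent upstream.
    print(summarize_window(window, alert_threshold=90.0))
```

The time-critical reaction to the alerting reading can happen on the edge node itself, while the cloud receives a compact summary for long-term analytics.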

Balancing Cloud and Edge: A Hybrid Approach

In many scenarios, a hybrid approach, combining both cloud and edge computing, provides the best of both worlds. This cloud-edge integration allows for the heavy-lifting computational tasks to be handled by the cloud while utilizing edge devices for processing time-sensitive data.

For instance, AWS IoT Greengrass extends AWS cloud capabilities to the edge, allowing users to build applications that can operate even when disconnected from the cloud. This hybrid model also optimizes network bandwidth by reducing the volume of data sent to the cloud. For machine learning applications, Greengrass enables local inference and model updates at the edge while still benefiting from cloud scalability.
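
One common way to keep an edge application working while disconnected is a store-and-forward buffer: messages queue locally and are delivered in order once the uplink returns. The sketch below is a generic illustration of that idea, not the Greengrass API; the `StoreAndForward` class and the `send` callback are hypothetical names.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer messages locally and deliver them in order when possible."""

    def __init__(self, send: Callable[[dict], bool], max_buffered: int = 1000):
        # `send` is a hypothetical uplink callback returning True on success.
        self._send = send
        # Bounded buffer: the oldest message is dropped when it is full.
        self._buffer: deque = deque(maxlen=max_buffered)

    def publish(self, message: dict) -> None:
        self._buffer.append(message)
        self.flush()

    def flush(self) -> int:
        """Try to deliver buffered messages; return how many were sent."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # still offline, keep the rest for later
            self._buffer.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._buffer)
```

A caller would invoke `flush()` periodically or when connectivity is restored. Bounding the buffer is a deliberate trade-off: an edge device with limited storage loses the oldest data rather than running out of memory.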

Design Patterns for Scalable Distributed Systems

Building a scalable and performant distributed system requires careful attention to architecture. Several design patterns have emerged as best practices for ensuring scalability and resilience.

Microservices Architecture

A microservices architecture (3) is a design pattern that divides a large, monolithic application into smaller, independent services that can be developed, deployed, and scaled independently. Each microservice typically represents a business capability, such as user authentication or payment processing, and communicates with other microservices via APIs.

One key advantage of microservices is their ability to scale independently. For example, during peak traffic periods, a service responsible for user authentication can be scaled up without affecting other services, such as those handling data storage.

A real-world example is Netflix, which employs microservices for its content delivery system. To stay reliable at a global scale (4), Netflix practices Chaos Engineering: intentionally injecting faults into the system to verify that failure recovery mechanisms are effective.

Sharding and Partitioning

When dealing with large datasets, sharding and partitioning become essential techniques. These approaches split data into smaller chunks or “shards,” which are distributed across different servers. Each shard can be scaled independently, ensuring that a single server doesn’t become a bottleneck.

For example, Cassandra, a distributed NoSQL database, partitions data across nodes by hashing each row’s partition key. It scales horizontally: new nodes added to the cluster take over a share of the data, and each partition is replicated to multiple nodes for fault tolerance. This technique keeps the system available and responsive, even as data volumes grow.
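
The idea of spreading keys across nodes so that adding a node moves only a fraction of the data can be sketched with a small consistent-hash ring. This is an illustrative toy, not Cassandra's implementation: the `HashRing` class is hypothetical, and MD5 stands in for the partitioner hash purely for convenience.

```python
import bisect
import hashlib


def _token(key: str) -> int:
    """Map a string to a position on the ring (MD5 used as a stand-in)."""
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:8], "big")


class HashRing:
    def __init__(self, nodes, vnodes: int = 64):
        self._vnodes = vnodes  # virtual nodes per server smooth the balance
        self._ring = []  # sorted list of (token, node)
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        for i in range(self._vnodes):
            bisect.insort(self._ring, (_token(f"{node}#{i}"), node))

    def node_for(self, key: str) -> str:
        """Return the node owning the first token at or after the key's token."""
        if not self._ring:
            raise LookupError("ring has no nodes")
        idx = bisect.bisect_right(self._ring, (_token(key), ""))
        return self._ring[idx % len(self._ring)][1]  # wrap around the ring


if __name__ == "__main__":
    ring = HashRing(["node-a", "node-b", "node-c"])
    print(ring.node_for("user-42"))
```

When a fourth node joins, only the keys that land in its token ranges change owner; everything else stays where it was, which is what makes horizontal scaling cheap compared with rehashing every key.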

The Role of Fault Tolerance in Scaling Distributed Systems

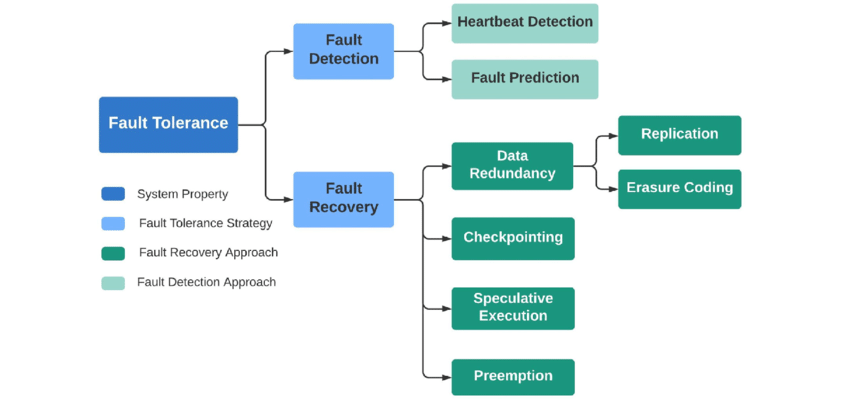

When scaling distributed systems, fault tolerance becomes an essential consideration. A distributed system must be able to continue operating even if one or more components fail.

Techniques like replication, redundancy, and circuit breakers are commonly used to enhance fault tolerance.

“Scaling distributed systems is not just about adding more resources, but designing for resilience, redundancy, and continuous growth without compromising performance or availability.” (5)

Replication and Redundancy

Replication involves creating copies of data across different servers or locations so that if one server fails, others can take over. This is a common practice in distributed databases such as Cassandra (6) and MongoDB, where data is replicated across multiple nodes so that it remains available when a node fails.

In addition to data replication, services and infrastructure components are also typically replicated in multiple availability zones or regions to ensure resilience. For instance, AWS offers multi-AZ deployment options, where critical systems are deployed across multiple availability zones to prevent downtime in case of localized failures.
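
To show how replicated writes and failover reads fit together, here is a deliberately small in-memory sketch. The `Replica` and `ReplicatedStore` classes and the `write_quorum` parameter are hypothetical; the sketch has no versioning, repair, or rollback of partial writes, all of which a real replicated database needs.

```python
class Replica:
    """In-memory stand-in for one storage node."""

    def __init__(self, name: str):
        self.name = name
        self.up = True
        self.data = {}

    def put(self, key, value) -> None:
        if not self.up:
            raise ConnectionError(f"{self.name} is down")
        self.data[key] = value

    def get(self, key):
        if not self.up:
            raise ConnectionError(f"{self.name} is down")
        return self.data[key]


class ReplicatedStore:
    def __init__(self, replicas, write_quorum: int):
        self.replicas = list(replicas)
        self.write_quorum = write_quorum

    def put(self, key, value) -> int:
        """Write to every reachable replica; fail if too few acknowledge."""
        acks = 0
        for replica in self.replicas:
            try:
                replica.put(key, value)
                acks += 1
            except ConnectionError:
                continue
        if acks < self.write_quorum:
            # Note: replicas that did accept the write are not rolled back.
            raise RuntimeError(f"only {acks} acks, need {self.write_quorum}")
        return acks

    def get(self, key):
        """Read from the first reachable replica that has the key."""
        for replica in self.replicas:
            try:
                return replica.get(key)
            except (ConnectionError, KeyError):
                continue
        raise KeyError(key)
```

With three replicas and a write quorum of two, the store keeps accepting reads and writes while any single replica is down, which mirrors the multi-AZ idea at a much smaller scale.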

Circuit Breakers and Graceful Degradation

A circuit breaker (7) is a pattern that detects repeated failures and stops a system from attempting an action that is likely to fail. Once a failure threshold is reached, the breaker “opens”: calls to the failing component fail fast or fall back to a default response, so the system degrades gracefully instead of piling up requests, and traffic can be rerouted to healthy instances.

Hystrix, a fault tolerance library from Netflix (8), implements the circuit breaker pattern so that distributed services remain resilient even when individual components fail.
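
The pattern itself is small enough to sketch directly. The class below is a minimal illustration of the closed, open, and half-open states, not Hystrix's API; the names `CircuitBreaker`, `failure_threshold`, `reset_timeout`, and `fallback` are my own choices.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout  # seconds before a trial call is allowed
        self._clock = clock  # injectable so tests can control time
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "half-open"
        return "open"

    def call(self, func, *args, fallback=None, **kwargs):
        if self.state == "open":
            # Fail fast: do not touch the unhealthy dependency.
            if fallback is not None:
                return fallback()
            raise CircuitOpenError("circuit is open")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self.state == "half-open" or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

The `fallback` callable is where graceful degradation lives: it might return cached data or a default response while the failing dependency recovers.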

To ensure optimal performance in a scalable distributed system, it’s important to continuously monitor and optimize key performance indicators (KPIs), such as latency, throughput, and error rates.

Real-time monitoring tools and log aggregation systems, such as Prometheus, Grafana, and the ELK Stack, enable engineers to identify performance bottlenecks proactively and make adjustments.
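
The KPIs named above (latency, throughput, and error rate) are simple to compute once request samples are collected. The sketch below uses a nearest-rank percentile; the `Request` type and the `summarize` function are hypothetical, and a monitoring stack would normally compute these from histograms rather than raw samples.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    latency_ms: float
    ok: bool


def percentile(values, pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(requests, window_seconds: float) -> dict:
    """Throughput, error rate, and latency percentiles for one time window."""
    latencies = [r.latency_ms for r in requests]
    errors = sum(1 for r in requests if not r.ok)
    return {
        "throughput_rps": len(requests) / window_seconds,
        "error_rate": errors / len(requests),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
    }
```

Tail percentiles such as p95 are usually more informative than the average, because a small share of slow requests can hide behind a healthy-looking mean.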

Additionally, performance tuning techniques, such as caching, data compression, and content delivery networks (CDNs), help to further reduce latency and enhance user experience.

Caching for Performance

Caching reduces the load on backend systems by temporarily storing frequently accessed data in a fast-access storage layer. Redis and Memcached are popular caching tools that can significantly reduce database load and improve response times.

For example, Cloudflare’s CDN caches static content close to the user, reducing the distance data has to travel and thereby improving site load times.
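
The caching idea described above is often implemented as "cache-aside": check the cache first, and on a miss load from the backend and store the result with a time-to-live. The in-process sketch below illustrates the pattern only; the `TTLCache` class and `loader` callback are hypothetical, and in production the dictionary would typically be replaced by Redis or Memcached.

```python
import time


class TTLCache:
    """Tiny in-process cache-aside helper with per-entry expiry."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so tests can control time
        self._entries = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        """Return the cached value, or call loader(key) on a miss or expiry."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit
        value = loader(key)  # cache miss: go to the backend
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key) -> None:
        """Drop a key, for example after the backend record is updated."""
        self._entries.pop(key, None)
```

The TTL bounds how stale a cached value can get, and explicit invalidation on writes tightens that further at the cost of a little extra coordination.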

The Future of Scalability and Resilience in Distributed Systems

The future of distributed systems will be driven by autonomous resilience, AI-powered optimizations, and serverless distributed architectures. AI-driven self-healing systems will predict failures, analyze root causes, and auto-correct issues in real time using reinforcement learning, anomaly detection, and predictive analytics. These systems will dynamically reconfigure resources, roll back faulty deployments, and optimize traffic routing, reducing downtime and operational intervention.

Serverless multi-region failovers will simplify disaster recovery by automatically shifting traffic to healthy locations during failures. Global load balancing and real-time data replication will ensure minimal downtime and data loss while keeping operations smooth and cost-efficient.

Conclusion

Building distributed systems that can handle the complexities of modern-day computing (scaling dynamically, maintaining fault tolerance, and ensuring high performance) requires an in-depth understanding of both architecture and technology. By leveraging cloud computing, edge technologies, and best practices such as microservices, sharding, and load balancing, organizations can create systems that not only scale but perform at the highest levels.

As the tech landscape continues to evolve, integrating cloud and edge technologies with hybrid architectures will play a pivotal role in shaping the future of distributed systems. The real-world examples from companies like Amazon, Netflix, and Google provide invaluable lessons on how to tackle these challenges effectively.

By following these principles and best practices, organizations can build resilient, scalable systems capable of handling the increasing demands of today’s data-driven world.

References

(1) GeeksforGeeks. (2023). Distributed systems overview.

https://media.geeksforgeeks.org/wp-content/uploads/20230331120006/Distributed-System.png

(2) Pointnity Network. (2021). Scaling distributed systems: Fog-Edge computing architecture.

https://www.researchgate.net/figure/Fog-Edge-computing-architecture_fig1_348226556

(3) IEEE Xplore. (2017). Research on Architecting Microservices: Trends, Focus, and Potential for Industrial Adoption.

https://ieeexplore.ieee.org/document/7930195

(4) Netflix Tech Blog. (2015). Case studies on microservices, performance tuning, and reliability.

http://techblog.netflix.com/2015/02/a-microscope-on-microservices.html

(5) Neuroquantology. (2013). The Role of Distributed Systems in Cloud Computing: Scalability, Efficiency, and Resilience

https://bit.ly/Role-of-Distributed-Systems-Cloud-Computing

(6) Instaclustr. (2020). Apache Cassandra Data Modeling Best Practices Guide.

https://www.instaclustr.com/blog/cassandra-data-modeling/

(7) Investopedia. (2022). Circuit breaker.

https://www.investopedia.com/terms/c/circuitbreaker.asp

(8) Netflix Tech Blog. (2014). Introducing Hystrix for resilience engineering.

https://netflixtechblog.com/introducing-hystrix-for-resilience-engineering-13531c1ab362

(9) Research Gate. (2021). Fault tolerance in big data storage and processing systems: A review on challenges and solutions.

https://www.researchgate.net/figure/Classification-of-fault-tolerance-approaches_fig1_353379321

The views and opinions expressed in this document are solely the author’s own and do not reflect those of Uber.
