Pro tip: Do not upload images of your children to the internet, including to your social media profiles, even if your friends list consists only of people you know and trust.

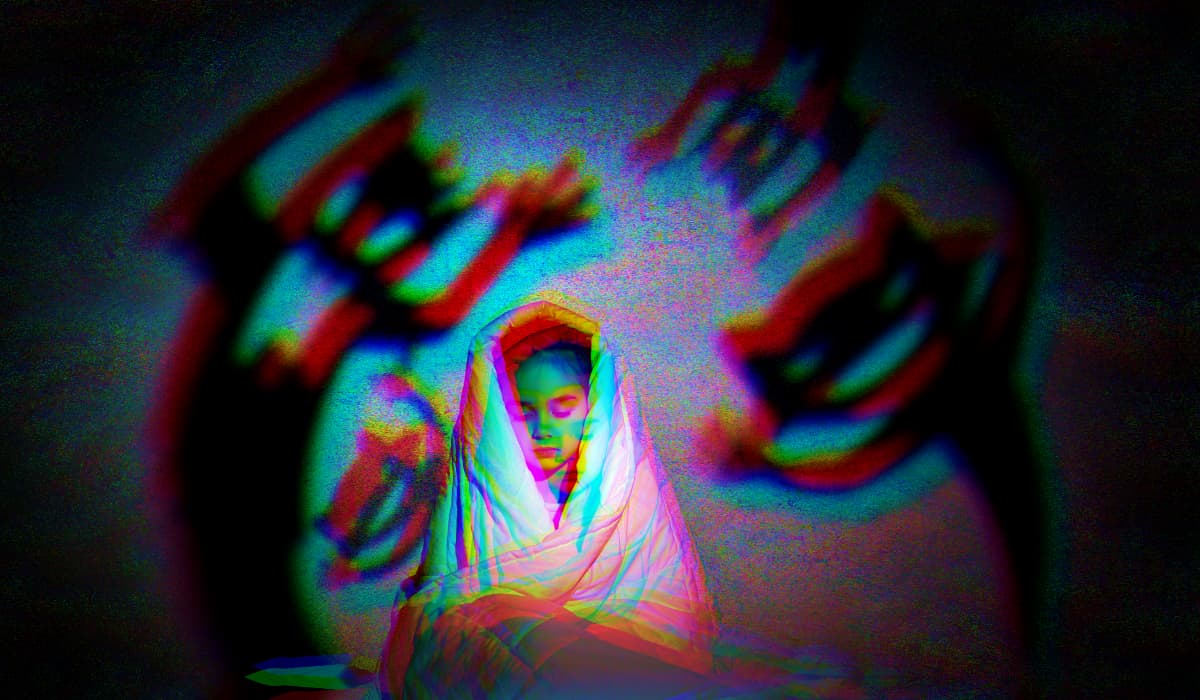

- The availability and sophistication of generative AI platforms are a growing concern. Cybercriminals can easily manipulate videos, images, and audio to create deepfake content.

- A UK-based internet watchdog has shared startling details of how paedophiles are turning to generative AI to create child sexual abuse content.

- The watchdog's research revealed that paedophiles use freely available AI software to create illegal child sexual abuse material.

- The Internet Watch Foundation observed paedophiles openly discussing on dark web forums how to modify the software to manipulate children's photographs.

- The spread of realistic fake imagery can make it harder to identify and help real-life victims.

We all know OpenAI's ChatGPT chatbot. You may also be aware that cybercriminals have developed malicious alternatives to ChatGPT, known in the cybersecurity community as FraudGPT and WormGPT. The bottom line is that cybercriminals will go to great lengths to turn Artificial Intelligence (AI) to malicious ends.

Now, according to a report published by a UK-based internet watchdog, the Internet Watch Foundation (IWF), cybercriminals are turning to generative AI platforms to create child sexual abuse material (CSAM).

According to the IWF's research, criminals' growing use of deepfake content that fabricates people or events is concerning because of the sheer scale of damage it can cause. The problem is far more serious when the content depicts children. The ease with which sex offenders can create such imagery could also help normalize it.

The IWF found that there has been a sudden surge in AI-generated child sexual abuse content available online. The organization also observed paedophile groups on dark web forums frequented by sex offenders sharing tips on creating fake images of children with open-source AI models.

Child safety experts and law enforcement agencies fear that the availability of realistic fake photos will make it harder to identify and help real-life victims of child sexual abuse. It should be noted that creating photorealistic child sexual abuse imagery is illegal in the UK, even when it is AI-generated.

Open-source AI models differ from closed models such as Google's Imagen in that anyone can download them. Offenders can run these models on their own PCs rather than in the cloud, which lets them bypass the safeguards and detection tools that cloud services apply. This could allow them to distribute such content far more frequently than they do now.

Offenders can take a base image-generation model trained on billions of tagged images and fine-tune it on child sexual abuse images using low-rank adaptation (LoRA), producing a similar model with minimal computation. The resulting realistic images can mislead law enforcement by diverting investigators' attention toward child victims who do not exist.

Earlier, the IWF's chief executive, Susie Hargreaves OBE, had warned the government about the seriousness of this issue.

In May 2023, the Internet Watch Foundation's hotline began recording cases involving AI-generated child sexual abuse material. Between May 24 and June 30, the IWF investigated 29 reports, submitted by members of the public, of URLs suspected of containing AI-generated child sexual abuse imagery.

Of these, the IWF confirmed that seven URLs contained AI-generated child sexual abuse imagery. These URLs often hosted multiple images, which could be entirely AI-generated or a mix of AI-generated and real content.

Disturbingly, the confirmed URLs contained material ranging from Category A, the most severe classification in the UK, to Category B. The imagery depicted both girls and boys, with the youngest victims aged 3 to 6 years old.

“We are not currently seeing these images in huge numbers, but it is clear to us the potential exists for criminals to produce unprecedented quantities of life-like child sexual abuse imagery. This would be potentially devastating for internet safety and for the safety of children online. We have a chance, now, to get ahead of this emerging technology, but legislation needs to be taking this into account and must be fit for purpose in the light of this new threat,” Hargreaves had said.

The head of the UK government's AI task force, Ian Hogarth, had also raised red flags about child sexual abuse material created using open-source AI models, describing it as among the "most heinous things out there."

The IWF's chief technology officer, Dan Sexton, described this as a "public health epidemic" that will only get worse as cybercriminals shift their focus to generative AI technology.

The IWF is a non-profit organization founded in 1996 to monitor the internet for sexual abuse content, especially content involving children, and to receive reports about such content.

How to Protect Your Child and Their Photos from Malicious Actors on the Dark Web

Here are 10 ways parents can protect their children and their photos from exposure to the dark web and individuals with malicious intent:

- Educate Your Children: Teach your kids about online safety, including the importance of not sharing personal information or photos and not engaging with strangers online.

- Use Privacy Settings: Adjust the privacy settings on social media platforms to ensure that only trusted contacts can view your child’s content.

- Regularly Check Friend Lists: Encourage your child to regularly review their friend lists and followers on social media and remove anyone they don’t know in real life.

- Watermark Photos: If you choose to share photos of your children online, consider watermarking them to deter unauthorized use.

- Limit Location Sharing: Disable location sharing on your child’s devices and social media accounts to prevent tracking by malicious individuals.

- Use Secure Devices: Ensure your child’s devices have up-to-date security software and are protected with strong passwords or biometrics.

- Teach Responsible Posting: Encourage responsible posting by discussing the potential consequences of oversharing personal information or photos.

- Monitor Online Activity: Keep an eye on your child's online activity without being overly invasive. Use parental control software or apps if necessary.

- Promote Open Communication: Create an environment where your child feels comfortable discussing any online interactions or concerns they may have.

- Report Suspicious Activity: Teach your child how to recognize and report suspicious behavior or content to both you and the relevant authorities.