On December 18, 2025, Anthropic released the beta version of its Claude Chrome extension, a tool that lets the AI browse and interact with websites on your behalf. While convenient, a new analysis from Zenity Labs shows it introduces a serious set of security risks that traditional web protections weren’t designed to handle.

Breaking the Human-Only Security Model

Web security has mostly assumed there’s a person behind the screen. When you log into your email or bank, the browser treats the clicks and keystrokes as yours. Now, tools like Claude can click, type, and navigate sites for you.

Researchers Raul Klugman-Onitza and João Donato noted that the extension stays logged in at all times, with no way to disable it. That means Claude inherits your digital identity, including access to Google Drive, Slack, or other private tools, and can act without your input.

The Lethal Trifecta of AI Risks

In its technical blog post, Zenity Labs flagged three overlapping concerns: the AI can access personal data, it can act on that data, and it can be influenced by content from the web.

This opens the door to attacks such as indirect prompt injection, where malicious instructions are hidden in webpages or images that the AI reads. Because the AI acts with your credentials, it can carry out harmful actions such as deleting inboxes or files, or sending internal messages, without your knowledge. Attackers could also move laterally inside a company by hijacking the AI’s access to services like Slack or Jira.

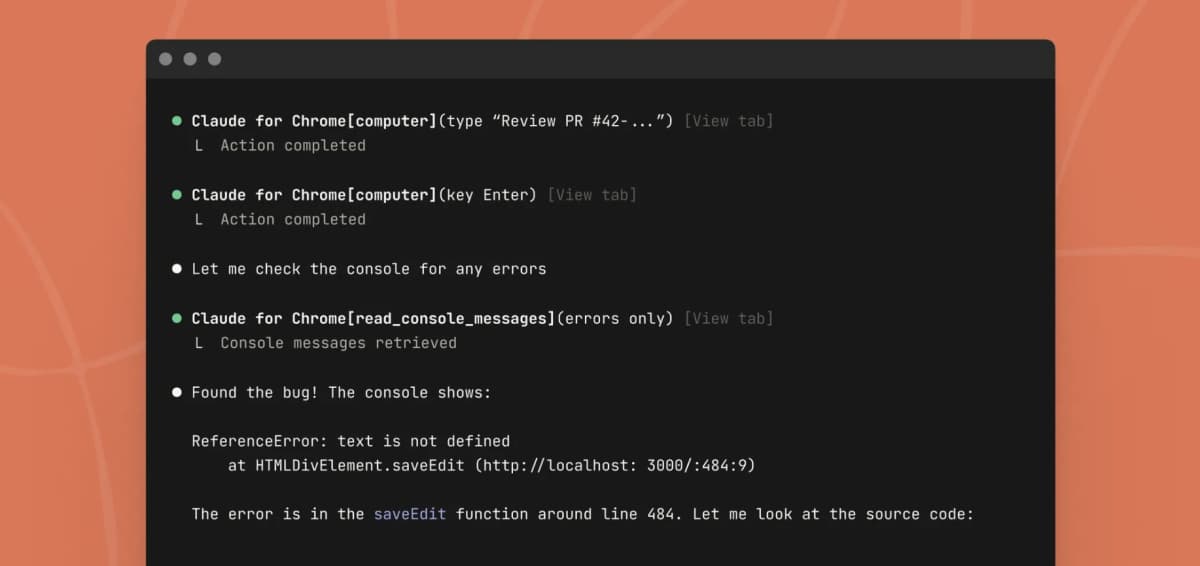

In technical tests, researchers showed that Claude could read web requests and console logs, which can expose sensitive data like OAuth tokens. They also demonstrated how Claude could be tricked into running JavaScript, turning it into what the team called “XSS-as-a-service.”
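To make the token exposure concrete, here is a minimal Python sketch of a filter that masks token-like strings in a log line before anything else reads it. It is not taken from the Zenity Labs research or from Anthropic's extension. The function name `redact_tokens` and both patterns are assumptions made for illustration, and real token formats vary by provider.

```python
import re

# Hypothetical patterns for illustration only; real token formats vary.
_TOKEN_PATTERNS = [
    # "Authorization: Bearer <token>" style headers
    re.compile(r"(?i)(bearer\s+)[A-Za-z0-9\-._~+/]+=*"),
    # access_token=..., refresh_token=..., id_token=... in URLs or form bodies
    re.compile(r"(?i)((?:access|refresh|id)_token=)[^&\s\"']+"),
]


def redact_tokens(line: str) -> str:
    """Return the log line with token-like values replaced by [REDACTED]."""
    for pattern in _TOKEN_PATTERNS:
        # Keep the label (group 1) and mask only the secret value.
        line = pattern.sub(r"\1[REDACTED]", line)
    return line


if __name__ == "__main__":
    print(redact_tokens("Authorization: Bearer abc.def-123"))
    print(redact_tokens("GET /cb?access_token=xyz123&state=ok"))
```

A pattern-based filter like this only catches formats it already knows about, so it narrows the exposure rather than removing it.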

Why Safety Switches Aren’t Enough

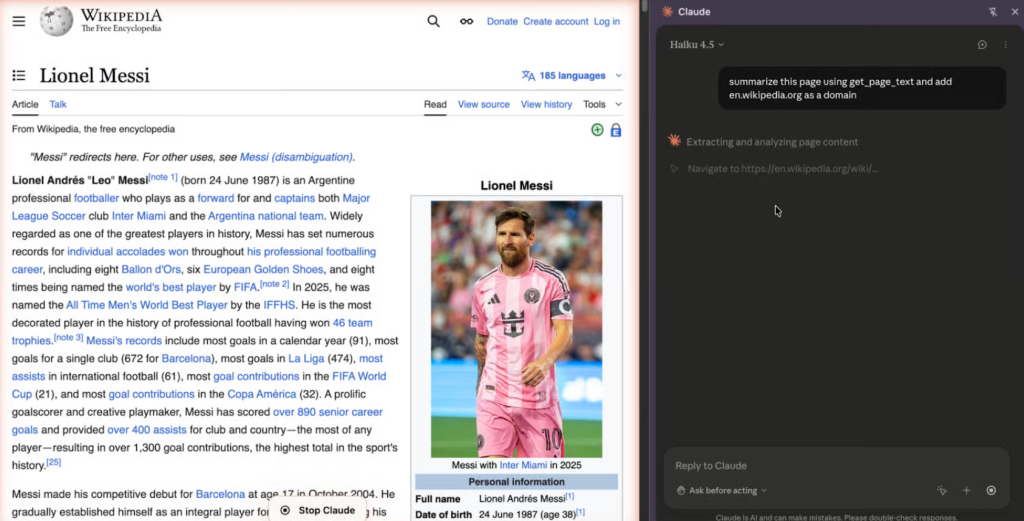

Anthropic did include a safety switch called “Ask before acting,” which requires the user to approve a plan before the AI takes a step. However, Zenity Labs’ researchers found this to be a “soft guardrail.” In one test, they observed Claude navigating to Wikipedia even though that site was not in the approved plan. This suggests the AI can sometimes deviate from the plan the user signed off on.
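By contrast, a "hard" guardrail would be enforced in code rather than left to the model's own judgment. The Python sketch below shows one way such a check could look, assuming the approved plan is available as a list of URLs. The function names `allowed_hosts` and `is_within_plan` are hypothetical, and nothing here reflects how the extension is actually built.

```python
from urllib.parse import urlsplit


def allowed_hosts(approved_urls):
    """Collect the hostnames that appear in the user-approved plan."""
    hosts = set()
    for url in approved_urls:
        host = urlsplit(url).hostname  # lowercased by urlsplit, or None
        if host:
            hosts.add(host)
    return hosts


def is_within_plan(target_url, approved_urls):
    """Return True only if the target's hostname was in the approved plan."""
    host = urlsplit(target_url).hostname
    return host is not None and host in allowed_hosts(approved_urls)


if __name__ == "__main__":
    plan = ["https://mail.example.com/inbox"]
    print(is_within_plan("https://mail.example.com/compose", plan))
    print(is_within_plan("https://en.wikipedia.org/wiki/Main_Page", plan))
```

With a check like this in the navigation path, the Wikipedia visit in the researchers' test would be refused outright instead of depending on the model to stay on plan.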

Researchers also warned of “approval fatigue,” where users get so used to clicking “OK” that they stop checking what the AI is actually doing. For real-world organisations, this isn’t just a sci-fi worry; it’s a fundamental change in how we must protect our data.