This laser attack can also be used to unlock various vehicles (e.g., Tesla and Ford).

Researchers from the University of Electro-Communications in Japan and the University of Michigan in the United States recently discovered that light sources such as laser beams and flashlights can be used to control certain voice-controlled digital assistants.

The attack was demonstrated in several settings. For example, a garage door was opened by shining a laser beam at a Google Home, as showcased in the video below.

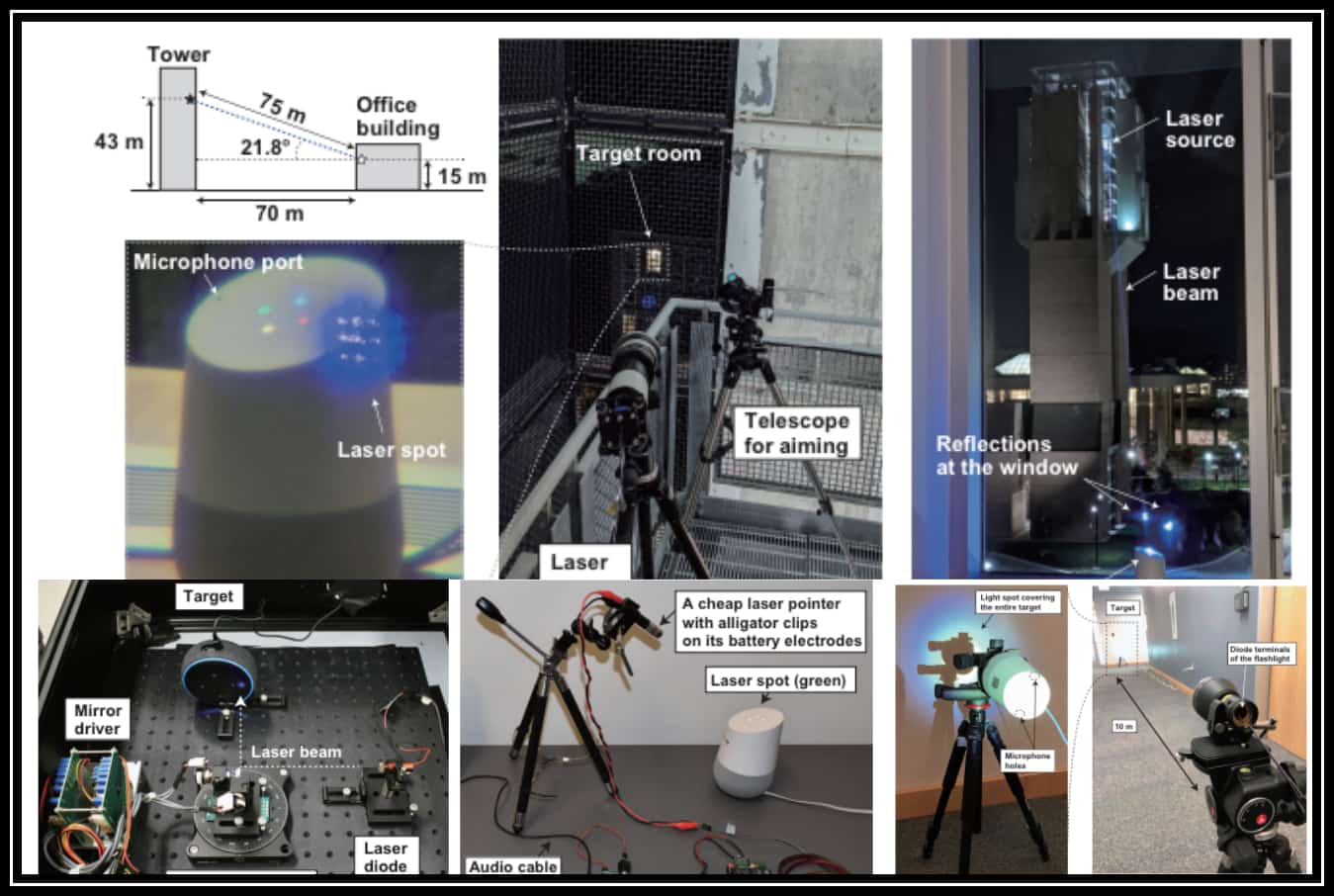

Taking it further, a Google Home device was given commands from 230 feet away, in another building, and it still managed to open a garage door. To go further still, a telephoto lens could be used to extend the range.


This works through a technique termed Light Commands, which exploits a vulnerability in the MEMS microphones embedded in most of these systems: they react to light the same way they would to sound.

Now, you might be thinking that only devices such as Amazon Alexa, Echo and Google Home may be vulnerable, right? Not so much. This technique can also be used to target the voice assistants on phones and tablets, such as Apple’s iconic Siri. In fact, the researchers successfully attacked the iPhone XR, Samsung Galaxy S9, and Google Pixel 2 at short distances.

However, two factors decide whether the attack succeeds in the first place and, if it does, how much damage it can cause. First, if your voice assistant is not enabled, the light naturally has no effect. This may be the case for many smartphone users, whereas owners of dedicated voice assistant devices such as Alexa are unlikely to disable the very functionality they bought the device for.

Second, the severity of the attack depends on the level of access you have given the assistant to control both the device itself and other devices connected to it. For example, if you manage your home appliances through Apple’s Home app, which is designed to enable smooth IoT functioning, the attacker could control all of those appliances.

“We show that user authentication on these devices is often lacking or non-existent, allowing the attacker to use light-injected voice commands to unlock the target’s smart lock-protected front doors, open garage doors, shop on e-commerce websites at the target’s expense, or even locate, unlock and start various vehicles (e.g., Tesla and Ford) that are connected to the target’s Google account,” the researchers wrote in their study paper.

On the other hand, those who use voice assistants in isolation would be exposed only to what the smartphone itself can do, such as making unauthorized calls and accessing the internet.

Yet perhaps the scarier part is the price of the equipment required for such attacks. Based on the video demonstrations and figures from the researchers themselves, one needs the following to get up and running:

- A laser pointer – <$20

- A sound amplifier – $28

- A telephoto lens for long-range attacks – <$200

- A tripod to stabilize the telephoto lens – <$50

- A laser driver – $339
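To put the list in perspective, here is a quick tally of the prices above. It is only a rough sketch: the "<" figures are treated as upper bounds, and grouping the telephoto lens and tripod as "long-range only" is an assumption based on how the list describes them.

```python
# Upper-bound prices in USD, taken from the list above ("<$20" is counted as 20).
EQUIPMENT_USD = {
    "laser pointer": 20,
    "sound amplifier": 28,
    "telephoto lens": 200,
    "tripod": 50,
    "laser driver": 339,
}

# Assumption: only these two items are specific to long-range attacks.
LONG_RANGE_ONLY = {"telephoto lens", "tripod"}


def total_cost(items, include_long_range=True):
    """Sum the listed prices, optionally leaving out the long-range gear."""
    return sum(
        price
        for name, price in items.items()
        if include_long_range or name not in LONG_RANGE_ONLY
    )


print("Full kit, at most (USD):", total_cost(EQUIPMENT_USD))
print("Short-range kit, at most (USD):", total_cost(EQUIPMENT_USD, include_long_range=False))
```

In other words, the full setup comes in under roughly $640, and most of that is the laser driver.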

And tada! With that equipment in hand, an attacker can start wreaking havoc.

“This opens up an entirely new class of vulnerabilities,” said Kevin Fu, an associate professor of electrical engineering and computer science at the University of Michigan. “It’s difficult to know how many products are affected, because this is so basic.”


Nonetheless, there are a couple of ways to guard against this security problem if you do want to keep your voice assistant enabled. First, make sure your device is not within the line of sight of any laser or flashlight, whether at close range or through a window facing a building across the way.

Second, device manufacturers could add two-factor authentication that seeks the user’s permission before executing all or certain commands. Although this may not entirely mitigate the problem, it could provide a good short-term solution.
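As a rough illustration of that second idea, the sketch below gates sensitive commands behind an extra confirmation step. Everything in it is hypothetical: the command list, the `execute_command` function and the PIN check are made up for this example and do not reflect how any vendor actually implements it.

```python
import hmac

# Hypothetical set of commands a vendor might treat as sensitive.
SENSITIVE_COMMANDS = {
    "unlock front door",
    "open garage door",
    "start car",
    "place order",
}


def execute_command(command, confirm_pin=None, stored_pin="4921"):
    """Run harmless commands directly; require a matching PIN for sensitive ones.

    stored_pin is a placeholder; a real device would not hard-code it.
    """
    normalized = command.strip().lower()
    if normalized not in SENSITIVE_COMMANDS:
        return f"executed: {normalized}"
    if confirm_pin is None:
        return "confirmation required"
    # Constant-time comparison so the check does not leak timing information.
    if hmac.compare_digest(str(confirm_pin).encode(), stored_pin.encode()):
        return f"executed: {normalized}"
    return "denied"


print(execute_command("What is the weather"))
print(execute_command("Open garage door"))
print(execute_command("Open garage door", confirm_pin="4921"))
```

For the check to help against light-injected commands, the confirmation would presumably have to arrive through a channel other than the microphone, such as a prompt in a phone app, since a spoken PIN could be injected the same way as the command itself.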


In the long term, it remains to be seen how the vulnerability in MEMS microphones will be fixed in future versions of these devices.
