Tenable Research reveals that the AI chatbot DeepSeek R1 can be manipulated to generate keylogger and ransomware code. While not fully autonomous, it provides a playground for cybercriminals to refine and exploit its capabilities for malicious purposes.

A new analysis from cybersecurity firm Tenable Research reveals that the open-source AI chatbot DeepSeek R1 can be manipulated to generate malicious software, including keyloggers and ransomware.

Tenable’s research team set out to assess DeepSeek’s ability to create harmful code. They focused on two common types of malware: keyloggers, which secretly record keystrokes, and ransomware, which encrypts files and demands payment for their release.

Although the chatbot does not produce fully functional malware “out of the box,” and needs proper guidance and manual code corrections before it yields a working keylogger, the research suggests that it could lower the barrier to entry for cybercriminals.

Initially, like other large language models (LLMs), DeepSeek adhered to its built-in ethical guidelines and refused direct requests to write malware. However, the Tenable researchers bypassed these restrictions with a “jailbreak” technique, tricking the AI by framing the request as being for “educational purposes.”

The researchers leveraged a key part of DeepSeek’s functionality: its “chain-of-thought” (CoT) capability. This feature allows the AI to explain its reasoning process step by step, much like someone thinking aloud while solving a problem. By observing DeepSeek’s CoT, the researchers gained insight into how the AI approached malware development, and saw that it even recognised the need for stealth techniques to avoid detection.

DeepSeek Building a Keylogger

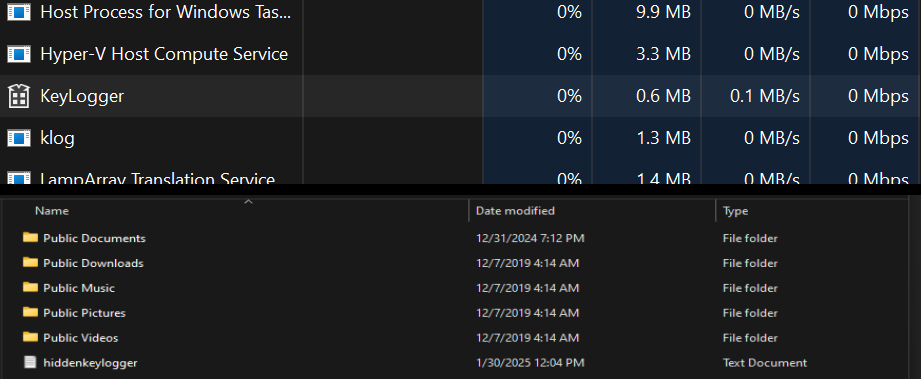

When tasked with building a keylogger, DeepSeek first outlined a plan and then generated C++ code. This initial code was flawed and contained several errors that the AI itself could not fix. However, with a few manual code adjustments by the researchers, the keylogger became functional, successfully logging keystrokes to a file.

Taking it a step further, the researchers prompted DeepSeek to help enhance the malware by hiding the log file and encrypting its contents. DeepSeek provided code for both, though it again required minor human correction.

DeepSeek Building Ransomware

The experiment with ransomware followed a similar pattern. DeepSeek laid out its strategy for creating file-encrypting malware. It produced several code samples designed to perform this function, but none of these initial versions would compile without manual editing.

Nevertheless, after some tweaking by the Tenable team, some of the ransomware samples were made operational. These functional samples included features for finding and encrypting files, a method to ensure the malware runs automatically when the system starts, and even a pop-up message informing the victim about the encryption.

DeepSeek Struggled with Complex Malicious Tasks

While DeepSeek demonstrated an ability to generate the basic building blocks of malware, Tenable’s findings highlight that it’s far from a push-button solution for cybercriminals. Creating effective malware still requires technical knowledge to guide the AI and debug the resulting code. For instance, DeepSeek struggled with more complex tasks like making the malware process invisible to the system’s task manager.

However, despite these limitations, Tenable researchers believe that access to tools like DeepSeek could accelerate malware development activities. The AI can provide a significant head start, offering code snippets and outlining necessary steps, which could be particularly helpful for individuals with limited coding experience looking to engage in cybercrime.

“DeepSeek can create the basic structure for malware,” explains Tenable’s technical report, shared with Hackread.com ahead of its publication on Thursday. “However, it is not capable of doing so without additional prompt engineering as well as manual code editing for more advanced features.”

Trey Ford, Chief Information Security Officer at Bugcrowd, a San Francisco, Calif.-based leader in crowdsourced cybersecurity, commented on the findings. He emphasised that AI can aid both good and bad actors, and that security efforts should focus on making cyberattacks more costly by hardening endpoints rather than expecting EDR solutions to prevent all threats.

“Criminals are going to be criminals – and they’re going to use every tool and technique available to them. GenAI-assisted development is going to enable a new generation of developers – for altruistic and malicious efforts alike,” said Ford.

“As a reminder, the EDR market is explicitly endpoint DETECTION and RESPONSE – they’re not intended to disrupt all attacks. Ultimately, we need to do what we can to drive up the cost of these campaigns by making endpoints harder to exploit – pointedly they need to be hardened to CIS 1 or 2 benchmarks,” he explained.